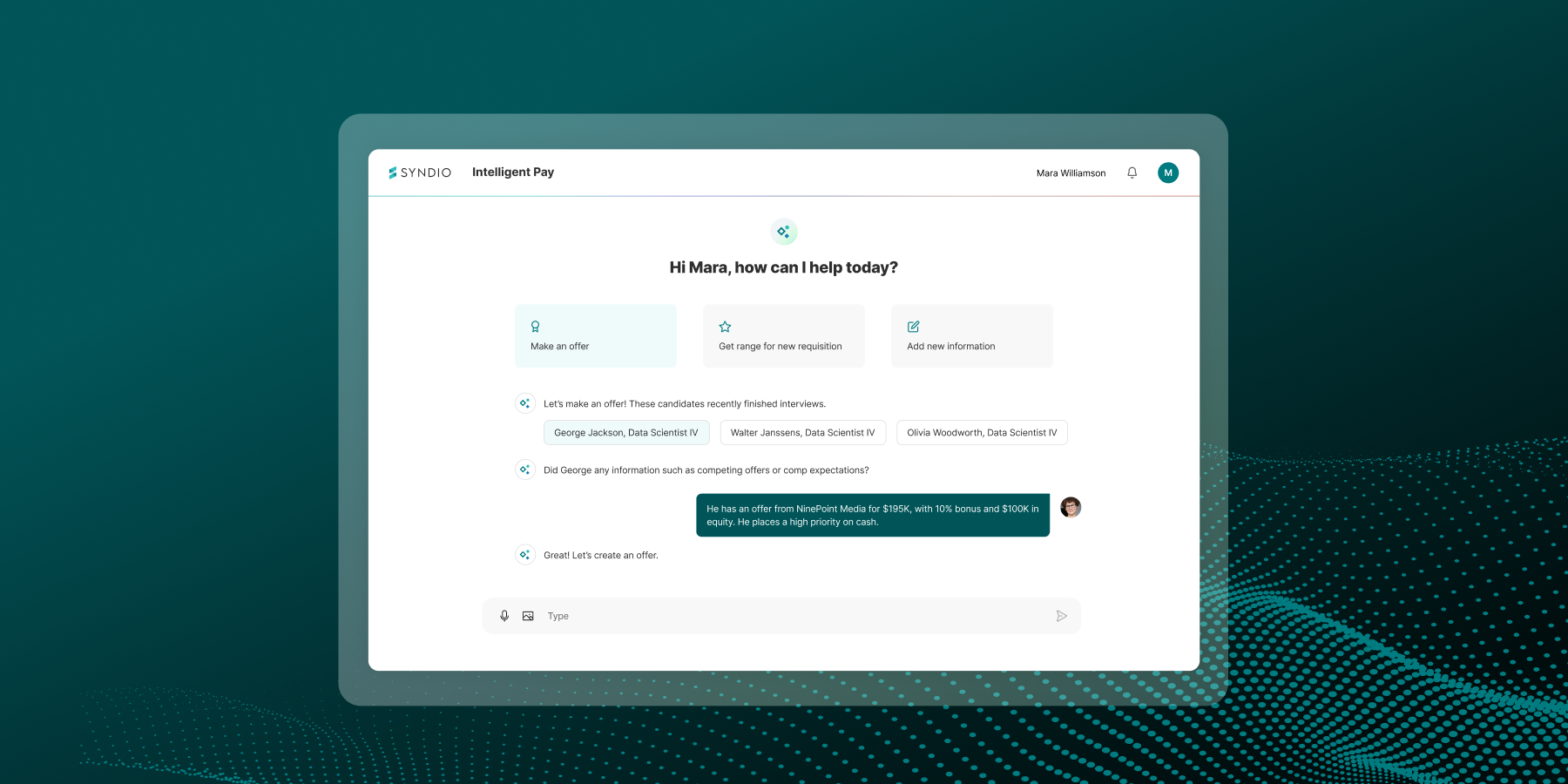

Statistics is both a science and a craft. As with other crafts, many tools are available, and a tool that is right for one job can be wrong for another. In pay equity analyses, using the right statistical tool at the right time is what yields meaningful and reliable insights. Under some circumstances, it is also imperative to apply thresholds that are consistent with applicable laws and regulations. A new feature of our PayEQ® pay equity analysis software puts you in the driver’s seat to make the right choice as you weigh these considerations.

In PayEQ, you can now modify the threshold used to determine whether a group meets size requirements for a robust statistical analysis. We call this the “small group threshold.” This article reviews the advantages and disadvantages of lowering the headcount requirement.

Should you conduct a regression analysis or perform a cohort review?

Broadly, there are two approaches to analyzing a group in a pay equity analysis: a regression analysis of the group in question (suitable for large groups) and a “cohort review” with supporting statistical tests (suitable for small groups). A key consideration in deciding which approach to use is how many employees are being evaluated.

For groups that are “large enough” for robust statistical analysis, the modeling technique called “multivariate regression” may be applied to identify whether, and to what extent, people’s compensation varies because of their protected category status (e.g., being female) after taking into account factors that legitimately matter to pay, like job level, educational attainment, length of service, or location. In general, for regression models (as for most statistical models), the more data put into the model, the more usable, stable, and reliable the results.
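To make the idea of “taking into account things that matter to pay” concrete, here is a minimal sketch of a multivariate regression on a small synthetic workforce. Everything in it is an assumption for illustration: the data are made up, the pay process (log pay driven by level and tenure, with a built-in 3% gap for women) is invented, and the hypothetical `fit_ols` helper is a plain least-squares solver, not a description of how PayEQ fits its models.

```python
# Illustrative sketch only: synthetic data and hypothetical names,
# not PayEQ's implementation.

def fit_ols(rows, outcomes):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Build the augmented matrix [X'X | X'y].
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * y for r, y in zip(rows, outcomes))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Synthetic workforce: every combination of level, tenure, and gender.
employees = [
    (level, tenure, female)
    for level in (1, 2, 3)
    for tenure in (1, 4, 8)
    for female in (0, 1)
]

# Assumed pay process: log pay rises with level and tenure and carries a
# built-in 3% penalty for women, so the regression has something to find.
log_pay = [
    11.0 + 0.15 * level + 0.01 * tenure - 0.03 * female
    for level, tenure, female in employees
]

# Design matrix: intercept, job level, tenure in years, female indicator.
design = [[1.0, level, tenure, female] for level, tenure, female in employees]
intercept, level_effect, tenure_effect, gender_gap = fit_ols(design, log_pay)
print(f"Estimated gender gap in log pay: {gender_gap:.3f}")
```

Because this synthetic data has no random noise, the coefficient on the female indicator recovers the built-in gap of about 3%. Real pay data are noisy, which is exactly why group size matters for how much to trust the estimate.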

For smaller groups, employers often conduct cohort reviews. A cohort review compares an individual’s pay to that of others in another class who could serve as “comparators.” Comparators typically perform similar work and are similarly qualified. Without the aid of technology, cohort reviews can be labor intensive, particularly as groups get large. It can also be difficult to find suitable comparators, so cohort reviews typically involve a lot of qualitative eyeballing and gut-checking of pay differentials. Certain statistical tests can provide some guidance in these small groups. In our pay equity analysis software PayEQ®, we leverage median tests, t-tests, and fixed effect regressions (which draw on information from the group in question as well as other groups) to help make cohort reviews more effective.

What is the “right” group size for a regression analysis?

How many employees are “enough” to conduct a multivariate regression?

There are some mathematical necessities (e.g., you can’t run a regression on one person, or analyze gender differences in groups that are all men or all women), but even when the math “runs,” the results are not necessarily stable, usable, or reliable. Think of a common example: flipping a coin. If you flipped a coin 10 times and ended up with 6 heads and 4 tails, you would not think you had a biased coin. If just one of those tosses had gone the other way, you’d be at precisely 50/50. But what if you flipped the coin 1,000 times and ended up with 600 heads and 400 tails? Or 10,000 times and ended up with 6,000 heads and 4,000 tails? In those instances, you would have more confidence that the coin was biased towards heads.
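The coin example can be checked with a few lines of code. The sketch below computes an exact two-sided binomial p-value under the assumption of a fair coin; the function name `two_sided_p_value` is ours, chosen for illustration. The same 60/40 split is unremarkable in 10 flips and extremely unlikely in 1,000 or 10,000.

```python
from math import comb


def two_sided_p_value(heads: int, flips: int) -> float:
    """Exact two-sided binomial p-value, assuming a fair coin (p = 0.5)."""
    # A fair coin is symmetric, so double the upper tail of the more
    # extreme side and cap the result at 1.
    k = max(heads, flips - heads)
    term = comb(flips, k)  # number of sequences with exactly k heads
    tail = 0
    for i in range(k, flips + 1):
        tail += term
        # Move from C(flips, i) to C(flips, i + 1) using exact integers.
        term = term * (flips - i) // (i + 1)
    return min(1.0, 2 * tail / 2 ** flips)


for heads, flips in [(6, 10), (600, 1_000), (6_000, 10_000)]:
    p = two_sided_p_value(heads, flips)
    print(f"{heads} heads in {flips} flips: p-value = {p:.3g}")
```

For 6 heads in 10 flips, the p-value is about 0.75, nowhere near the conventional 5% cutoff. For the two larger experiments it is vanishingly small.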

There is no bright-line rule, but labor economists and statisticians broadly agree that some groups are just too small to analyze with any reliability. When we built our pay equity analysis software PayEQ, we set thresholds of 30 individuals overall in the group and 10 from each category. For example, we would run a regression testing for a gender pay gap if a group contained at least 10 men and 10 women, and at least 30 workers overall. For smaller groups, PayEQ leverages other statistical tests when available, but we recommend that customers conduct the more manual cohort review.

Sometimes, however, the 30/10/10 threshold is not ideal. Some customers who are federal contractors wanted more flexibility to align these thresholds with the methodology used by the U.S. Department of Labor’s Office of Federal Contract Compliance Programs (OFCCP), the government agency responsible for ensuring that employers doing business with the federal government comply with the laws and regulations requiring nondiscrimination. The OFCCP applies lower requirements before it conducts a linear regression. Specifically, it conducts this analysis if there are at least 5 members of each group (for example, 5 men and 5 women) and 30 employees overall. This has taken on increasing importance in light of OFCCP’s revised Directive 2022-01 and its recent proposal to amend the scheduling letter and itemized listing so that additional information about the compensation analysis must be provided with the initial scheduling letter submission.

We are making that option available to PayEQ users. You can now set the “small group threshold” to 30/5/5, or keep the current threshold of 30/10/10.
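The two thresholds amount to a simple eligibility rule, which can be sketched as follows. The class and function names here (`SmallGroupThreshold`, `recommended_approach`) are hypothetical and chosen for illustration; this is not PayEQ’s actual configuration or API, only the 30/10/10 and 30/5/5 rules as described above.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class SmallGroupThreshold:
    """Hypothetical container for the headcount rule described in the text."""
    min_total: int          # minimum employees in the group overall
    min_per_category: int   # minimum employees in each compared category


DEFAULT_THRESHOLD = SmallGroupThreshold(min_total=30, min_per_category=10)  # 30/10/10
LOWER_THRESHOLD = SmallGroupThreshold(min_total=30, min_per_category=5)     # 30/5/5


def recommended_approach(category_counts: Mapping[str, int],
                         threshold: SmallGroupThreshold) -> str:
    """Return 'regression' if the group meets the threshold, else 'cohort review'."""
    total = sum(category_counts.values())
    large_enough = (
        total >= threshold.min_total
        and all(n >= threshold.min_per_category for n in category_counts.values())
    )
    return "regression" if large_enough else "cohort review"


group = {"men": 24, "women": 6}
print(recommended_approach(group, DEFAULT_THRESHOLD))
print(recommended_approach(group, LOWER_THRESHOLD))
```

A group of 24 men and 6 women falls short of the 30/10/10 rule because it has fewer than 10 women, but it qualifies for regression under 30/5/5. A group of 12 men and 12 women qualifies under neither, since it has fewer than 30 employees overall.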

What are the advantages and disadvantages of the different thresholds?

So, what are the pros and cons of lowering this limit from 10 to 5? The pros are obvious. First, with more groups eligible for analysis, you can have PayEQ run regressions on more groups, meaning more employees are subject to regression analysis and fewer cohort reviews are required. Second, if you are a federal contractor, following OFCCP guidelines makes sense.

The cons have to do with the statistical analysis itself. First, the results are much less robust and stable, and can be highly sensitive to an individual being added, removed, or receiving a pay increase within the group — just like the example of one coin toss landing the other way. This doesn’t mean that pay equity issues involving these individuals should not be identified and addressed. It means that a cohort review might be better suited to identifying the issues rather than a relatively volatile statistical test.

The second con has to do with something called statistical power. Basically, a test on a group with only five members of a category is more likely to give a “false negative” than a test on a group with ten. Let’s briefly discuss what this means in this context.

What is statistical power and why does it matter in the context of a pay equity analysis?

I hope this doesn’t cause painful flashbacks to a statistics course, but please bear with me. Two statisticians developed a framework (called the Neyman-Pearson approach) that highlights a key tension in any statistical test. There are two ways to be wrong: you can give a false positive or a false negative. If you want to reduce the odds of being wrong in one way, you increase the odds of being wrong in the other.

Type I (False Positive): Rejecting the null hypothesis incorrectly, i.e. our results show a statistically significant pay disparity when any observed differences are just random. Denoted by alpha.

Type II (False Negative): Failing to reject the null hypothesis when it is false, i.e. our results show no statistically significant pay disparity when the pay process is in fact biased to favor one group over another. Denoted by beta.

The standard threshold for statistical tests is alpha = 5%. This means that we refer to a result as “statistically significant” only when a difference at least as large as the one observed would arise less than 5% of the time from random chance alone, if there were no true disparity. This is also the commonly accepted threshold for pay equity analyses. A significant result means there is strong enough evidence of a class-based pay discrepancy to reject the idea that the difference we see is due to random chance.

So, we keep alpha constant at 5%, regardless of our sample sizes. This has implications for the other way we can be wrong — or the probability of delivering a false negative.

The impact of sample size thresholds on statistical power in a pay equity analysis

The heat maps below show how the two sample size thresholds affect the chance of a false negative: in one case we have 5 members of the impacted group, and in the other we have 10. Other factors are the same between the two heat maps. The overall sample size increases from left to right, and the “true” amount of bias in the group increases from bottom to top. Technical note: one other constant factor is baked in here, which is the variability of pay. We assume a 4% standard deviation of percent error.

Here, red is “good”: it indicates a high probability of flagging a group as having an issue when an underlying issue exists. Green groups are unlikely to be flagged, even though a class-based difference exists in how pay is assigned in those groups.

The takeaway is that the higher requirement means you are more likely to identify real issues, while with the lower requirements you are much more likely to miss them! The worry here is that the test may give you the green light — “nothing to see here” — and you decide not to conduct a cohort review, meaning you miss pay equity issues that you might have caught otherwise.
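A back-of-the-envelope calculation illustrates the same pattern. The sketch below is a simplification, not the method behind the heat maps: it assumes a simple two-group comparison of mean pay with a normal approximation rather than a full regression, uses the 4% standard deviation noted above, and holds alpha at 5%. The function name `approx_power` and the example gap sizes are ours.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(n_impacted: int, n_total: int, true_gap: float,
                 pay_sd: float = 0.04, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test for a pay gap between two groups.

    Assumes a normal approximation and equal pay variability in both groups.
    Power is 1 minus the false negative rate (beta).
    """
    n_other = n_total - n_impacted
    # Standard error of the difference in mean pay between the two groups.
    std_error = pay_sd * sqrt(1 / n_impacted + 1 / n_other)
    standard_normal = NormalDist()
    z_crit = standard_normal.inv_cdf(1 - alpha / 2)
    shift = abs(true_gap) / std_error
    # Probability that the test statistic lands in either rejection region.
    return standard_normal.cdf(shift - z_crit) + standard_normal.cdf(-shift - z_crit)


for gap in (0.02, 0.03, 0.05):
    power_5 = approx_power(5, 30, gap)
    power_10 = approx_power(10, 30, gap)
    print(f"true gap {gap:.0%}: power {power_5:.2f} with 5 members, "
          f"{power_10:.2f} with 10 members")
```

Under these assumptions, with 30 employees overall, a group with 10 members of the impacted category has a noticeably better chance of flagging a real gap than a group with only 5, and the difference is most pronounced for moderate gaps.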

What does this mean for your pay equity analysis and your OFCCP audits?

Lowering the sample size threshold means you’ll be able to run statistical tests on more of your comparison job groups. For federal contractors, this also means you can align to the same thresholds used by the OFCCP, as appropriate. Statistics is designed to use data systematically to answer the sorts of questions we’re looking at in a pay equity analysis, so using statistics is usually better than manually reviewing and comparing specific data points.

However, there is a case for reviewing and comparing specific data points! For small datasets in particular, cohort reviews extend the pay equity analysis to areas where statistical tests are likely to have very low power, meaning a manual review would raise issues while the test would indicate that there was not a systemic concern.

That said, you can have it both ways. Statistical tests will still raise the alarm in some cases, particularly when the pay differences are large. Even for groups that remain “green”, the p-value of the test can provide guidance on which groups are more likely to have potential issues, so you can continue to conduct cohort reviews in those groups.

Want to learn more about PayEQ or see the new Small Group Threshold feature in action?

The information provided herein does not, and is not intended to, constitute legal advice. All information, content, and materials are provided for general informational purposes only. The links to third-party or government websites are offered for the convenience of the reader; Syndio is not responsible for the contents on linked pages.